template<class T>

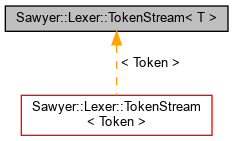

class Sawyer::Lexer::TokenStream< T >

An ordered list of tokens scanned from input.

A token stream is an ordered list of tokens scanned from an unchanging input stream and consumed in the order they're produced.
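The consume-in-order model described above can be illustrated with a small stand-alone sketch. Everything here is an assumption made for illustration: `MiniToken` and `MiniWordStream` are hypothetical stand-ins that borrow the documented member names (`current`, `operator[]`, `consume`, `atEof`, `lexeme`), the scanner simply splits on whitespace, and none of it is taken from Lexer.h.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in token: a span of input offsets plus an EOF flag.
struct MiniToken {
    bool eof;           // true for the end-of-input token
    std::size_t begin;  // offset of the first character of the lexeme
    std::size_t end;    // offset one past the last character
};

// Hypothetical stand-in stream; not the Sawyer implementation.
class MiniWordStream {
    std::string input_;              // unchanging input
    std::size_t at_ = 0;             // next input position to scan
    std::vector<MiniToken> tokens_;  // scanned but not yet consumed tokens

    static bool isSpace(char c) {
        return std::isspace(static_cast<unsigned char>(c)) != 0;
    }

    // Scan one whitespace-delimited word starting at at_.
    MiniToken scanNextToken() {
        while (at_ < input_.size() && isSpace(input_[at_]))
            ++at_;
        if (at_ >= input_.size())
            return MiniToken{true, input_.size(), input_.size()};
        std::size_t begin = at_;
        while (at_ < input_.size() && !isSpace(input_[at_]))
            ++at_;
        return MiniToken{false, begin, at_};
    }

    // Scan until tokens_[n] exists or the EOF token has been produced.
    void fill(std::size_t n) {
        while (tokens_.size() <= n && (tokens_.empty() || !tokens_.back().eof))
            tokens_.push_back(scanNextToken());
    }

public:
    explicit MiniWordStream(const std::string &s): input_(s) {}

    // Current (0) or future (>0) token; looking past the end yields EOF.
    // The reference is only valid until the next call on this stream.
    const MiniToken& operator[](std::size_t lookahead) {
        fill(lookahead);
        return lookahead < tokens_.size() ? tokens_[lookahead] : tokens_.back();
    }

    const MiniToken& current() { return (*this)[0]; }
    bool atEof() { return current().eof; }

    // Discard up to n tokens from the front; the EOF token is never discarded.
    void consume(std::size_t n = 1) {
        for (; n > 0 && !atEof(); --n)
            tokens_.erase(tokens_.begin());
    }

    // Copy the characters covered by a token out of the input.
    std::string lexeme(const MiniToken &t) const {
        return input_.substr(t.begin, t.end - t.begin);
    }
};
```

In this sketch, a stream over `"let x = 42"` reports `let` as the current token, `ts[2]` peeks at `=` without consuming anything, and `ts.consume(2)` makes `=` the current token. Buffering only the unconsumed tokens is one possible design; the real class may manage its lookahead differently.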

Definition at line 91 of file Lexer.h.

| Return type | Member | Description |
|---|---|---|
| | TokenStream (const boost::filesystem::path &fileName) | Create a token stream from the contents of a file. |
| | TokenStream (const std::string &inputString) | Create a token stream from a string. |
| | TokenStream (const Container::Buffer< size_t, char >::Ptr &buffer) | Create a token stream from a buffer. |
| const std::string & | name () const | Property: Name of stream. |
| const Token & | current () | Return the current token. |
| bool | atEof () | Returns true if the stream is at the end. |
| const Token & | operator[] (size_t lookahead) | Return the current or future token. |
| void | consume (size_t n=1) | Consume some tokens. |
| std::pair< size_t, size_t > | location (size_t position) | Return the line number and offset for an input position. |
| std::pair< size_t, size_t > | locationEof () | Returns the last line index and character offset. |
| std::string | lineString (size_t lineIdx) | Return the entire string for some line index. |
| virtual Token | scanNextToken (const Container::LineVector &content, size_t &at)=0 | Function that obtains the next token. |
| std::string | lexeme (const Token &t) | Return the lexeme for a token. |
| std::string | lexeme () | Return the lexeme for a token. |
| bool | isa (const Token &t, typename Token::TokenEnum type) | Determine whether token is a specific type. |
| bool | isa (typename Token::TokenEnum type) | Determine whether token is a specific type. |
| bool | match (const Token &t, const char *s) | Determine whether a token matches a string. |
| bool | match (const char *s) | Determine whether a token matches a string. |

Return the lexeme for a token.

Consults the input stream to obtain the lexeme for the specified token and returns that part of the stream as a string. The lexeme for an EOF token is an empty string, although other tokens might also have empty lexemes. The lexeme can be queried for any token regardless of whether it has been consumed, including tokens that the token stream has never seen.

The no-argument version returns the lexeme of the current token.

If you're trying to build a fast lexical analyzer, don't call this function to compare a lexeme against some known string. Instead, use match, which doesn't require copying.
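One way to picture why a lexeme can be queried for any token, consumed or not, is to treat a token as nothing more than a pair of offsets into the unchanging input. The sketch below assumes exactly that; `SpanToken` and `SpanSource` are hypothetical names invented for illustration and are not part of Lexer.h.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical token: an EOF flag plus a half-open range of input offsets.
struct SpanToken {
    bool eof;
    std::size_t begin;
    std::size_t end;
};

// Hypothetical holder for the unchanging input; not the Sawyer class.
class SpanSource {
    std::string input_;

public:
    explicit SpanSource(const std::string &s): input_(s) {}

    // Copy the characters covered by the token into a new string.
    // EOF tokens, empty ranges and out-of-range tokens yield an empty string.
    std::string lexeme(const SpanToken &t) const {
        if (t.eof || t.begin >= t.end || t.begin >= input_.size())
            return std::string();
        return input_.substr(t.begin, t.end - t.begin);
    }
};
```

Under these assumptions, a hand-made token such as `SpanToken{false, 6, 9}` over the input `"foo = bar"` yields the lexeme `bar` even though no scanner ever produced it. Each call allocates and copies a new string, which is the cost the advice about `match` is meant to avoid.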

Definition at lines 182 and 189 of file Lexer.h.

Determine whether a token matches a string.

Compares the specified string to a token's lexeme and returns true if they are the same. This is faster than obtaining the lexeme and comparing it to a string because no string copying is involved.

The overload without a token argument compares the string with the current token's lexeme.
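The difference between comparing in place and comparing a copied lexeme can be sketched as follows. This is an illustration under stated assumptions, not the library's code: `Span`, `matchInPlace` and `matchByCopy` are hypothetical names, and the input is assumed to be held in a single `std::string`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical token: a half-open range of offsets into the input.
struct Span {
    std::size_t begin;
    std::size_t end;
};

// Compare the token's characters against s directly in the input buffer.
// No temporary string is created.
inline bool matchInPlace(const std::string &input, const Span &t, const char *s) {
    if (t.begin > t.end || t.end > input.size())
        return false;
    return input.compare(t.begin, t.end - t.begin, s) == 0;
}

// Same result, but the lexeme is first copied into a temporary string.
inline bool matchByCopy(const std::string &input, const Span &t, const char *s) {
    if (t.begin > t.end || t.end > input.size())
        return false;
    return input.substr(t.begin, t.end - t.begin) == s;
}
```

Both helpers return the same answer for every token. The in-place version avoids one temporary string per comparison, which adds up when a parser tests each token against many keywords.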

Definition at lines 217 and 226 of file Lexer.h.
