Project #1: OpenMP Loop Scheduling Visualization

In this project you will be working with LLVM OpenMP runtime library, which is also used in the commercial Intel compiler. The runtime is the part of the OpenMP implementation that your code is linked with, and that manages execution of an OpenMP program by multiple threads. When a compiler encounters an OpenMP directive, it generates code that makes calls to the runtime library. The compiler outlines sections of code that are to run in parallel into separate functions that can then be invoked in multiple threads. For instance, simple code like this

void foo()

{

#pragma omp parallel

{

... do something ...

}

}

is converted into something that looks conceptually like this

static void outlinedFooBody()

{

... do something ...

}

void foo()

{

__kmpc_fork_call(outlinedFooBody, (void*)0, …); // Not the real function name!

}

In the worksharing construct, e.g. #pragma omp parallel for, iterations are executed in parallel by threads. They are distributed across threads according to the schedule clause, e.g. schedule(kind[, chunk_size]). The schedule clause can be specified by the following kinds: static, dynamic, guided, auto and runtime, where each kind distributes iterations among threads differently.

The goal of this project is to visualize the distribution of iterations to each thread by creating a graph. You will need to modify the OpenMP runtime source codes that handle the distribution of iterations and have the runtime to store loop distribution information to memory buffer. By the time the execution finishes, loop distribution info stored in the memory buffer is dumped to a text file in DOT format, which can be visualized using DOT tool.

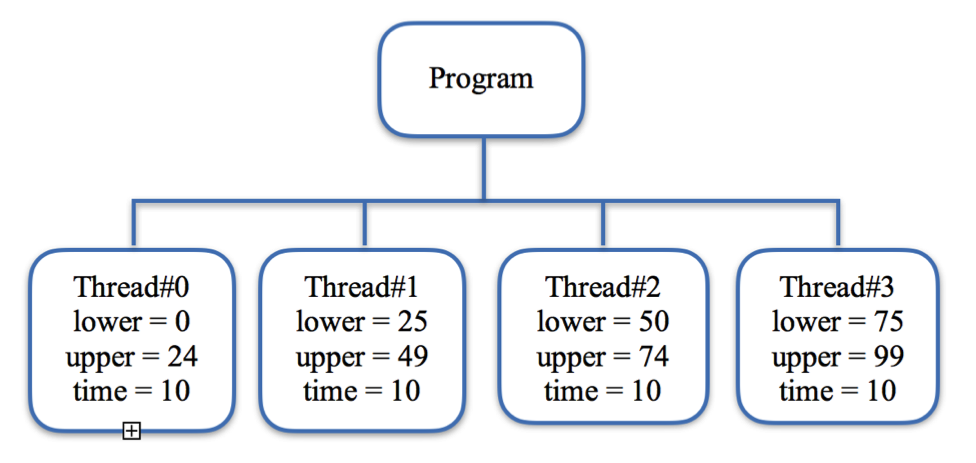

For instance, with static scheduling the number of iterations is evenly distributed among all OpenMP threads. If we have 4 threads and 100 iterations, then each thread will execute 25 iterations and the graph would be something similar as the following:

Development Setup

Development

- You mainly need to study and modify two files:

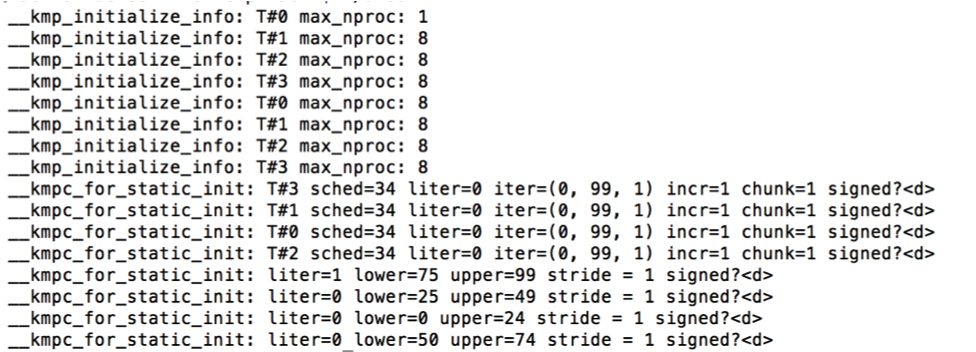

kmp_sched.cppandkmp_dispatch.cpp.kmp_sched.cppprovides functions (mainly__kmp_for_static_initfunction, also see manual 5.8.3.24, page #35 ) for supporting static schedule of worksharing loops andkmp_dispatch.cppprovides functions (mainly__kmpc_dispatch_initand__kmp_dispatch_nextfunctions, also see manual 5.8.3.6 and 5.8.3.10, page #32) for dynamic scheduling of worksharing loops.

About runtime tracing

The runtime tracing, when being turned on, prints out tracing information of task execution that can help you understand the work-stealing algorithm. To enable tracing, you need to set KMP_A_DEBUG=<n> where n is necessary level of trace messages. For example, to get messages with level <=20: set KMP_A_DEBUG=20. See below:

Schedule and submission in each phase (submit from Moodle):

| Phase | Deadline | Tasks | Submission |

|---|---|---|---|

| 1 | 03/17 | OpenMP runtime study, motivations, and design of the implementation. In the report, you will describe OpenMP runtime architecture, the motivation and design of the schedule graph. | Written report. |

| 2 | 03/27 | Initial graph generation for static and dynamic with chunk sizes; and auto and guided with chunk sizes | Implementation, doc and evaluation report |

| 3 | 04/17 | Refine graph generation to include more information such as timing, and CPU counter info by using PAPI interface. Project presentation. | Implementation, doc, evaluation report and presentation |

| 4 | 04/24 | Final project report due. | Modified source files, final report and presentation |

Resources:

- DOT graph format, DOT visualization: xdot on Linux

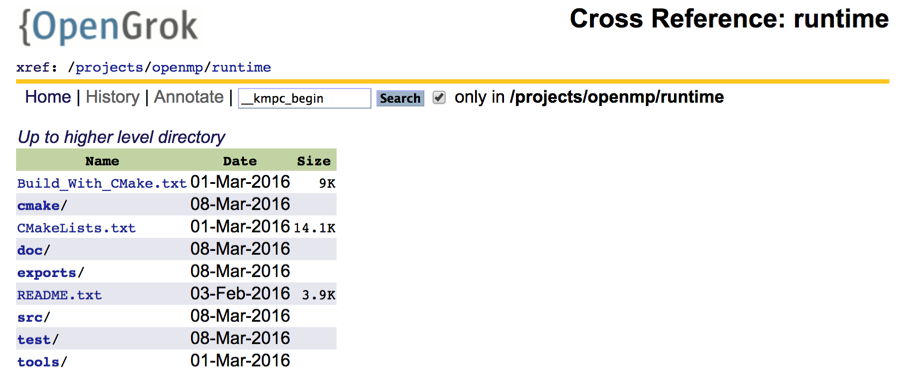

- Read the source code from web browswer with cross-link and search(need VPN to access from off-campus): http://orion.ec.oakland.edu:8080/llvm/xref/projects/openmp/runtime/. E.g.

Sections in your report:

- Introduction (introduce the background, motivation and contribution of the work)

- Motivation and Proposed Solution

- Implementation: which discusses how Intel OpenMP runtime works that are relevant to what you are doing, how do you implement, and the challenge and solution you faced while implementing the proposed solution.

- Experimental Results: show off your work using real results or figures.

- Related Work: discuss the similarity and differences of yours with others and why yours are better

- Conclusion: conclusion, implication and future work

- References

Your submission should include everything in one zipped package: the report in editable form, presentation slide and sources code/makefile, and a file that provides a summary of your changes to the original Intel OpenMP runtime.